Demand-Driven Deep Reinforcement Learning for Scalable Fog and Service Placement

- Post by: Hani Sami

- 25 June 2021

Abstract

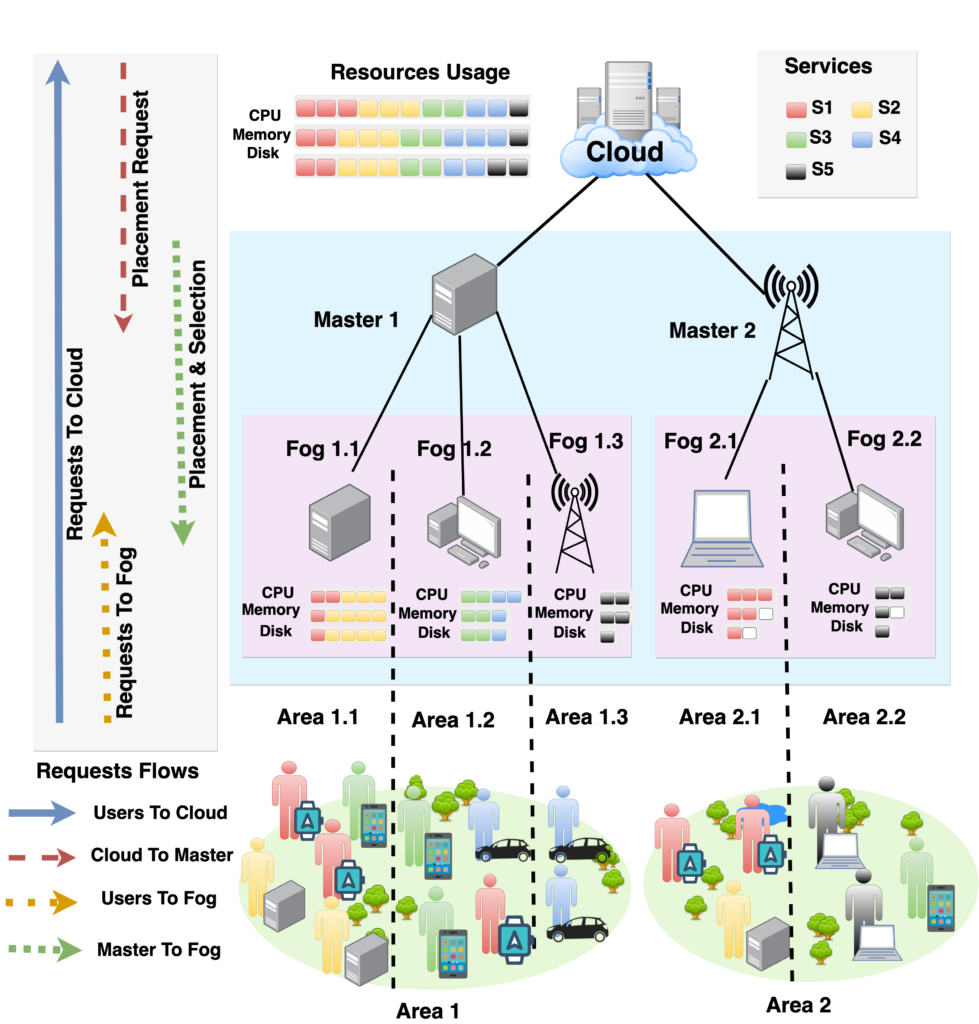

The growing number of Internet of Things (IoT) devices calls for a more substantial fog computing infrastructure to support users’ demand for services. In this context, the placement problem consists of selecting fog resources and mapping services to these resources. This problem is particularly challenging because both users’ demand and the available fog resources change dynamically. Because the problem is NP-hard, existing solutions rely on on-demand fog formation and periodic container placement using heuristics. Unfortunately, constant service updates are time-consuming in terms of environment setup, especially when the required services and available fog nodes keep changing. Therefore, given the need for fast and proactive service updates to meet users’ demand, and the complexity of the container placement problem, we propose in this paper a Deep Reinforcement Learning (DRL) solution, named Intelligent Fog and Service Placement (IFSP), to perform instantaneous placement decisions proactively. By proactively, we mean making placement decisions before demands occur. The DRL-based IFSP is developed through a scalable Markov Decision Process (MDP) design. To address the long time DRL needs to converge and the large number of errors incurred during exploration, we also propose a novel end-to-end architecture that uses a service scheduler and a bootstrapper on the cloud. Our scheduler and bootstrapper perform offline learning on users’ demand recorded in server logs. Through experiments and simulations performed on the NASA server logs and Google Cluster Trace datasets, we explore the ability of IFSP to perform efficient placement and overcome the above-mentioned DRL limitations. We also show the ability of IFSP to adapt to changes in the environment and improve the Quality of Service (QoS) compared to state-of-the-art heuristic and DRL solutions.

Authors’ Notes

We present in this paper a new scheme that builds on on-demand fog formation technologies. It is an end-to-end architecture that relies on the cloud to perform offline learning. This is achieved by incorporating an Intelligent Fog Service Scheduler (IFSS) and an Intelligent Fog and Service Placement (IFSP) component on the cloud. IFSS is built using the R-Learning algorithm, which identifies the time and location at which an environment change occurs. IFSS is then responsible for triggering IFSP, formulated using DQN, to build the intelligent service placement model from data in the cloud server logs. This process is called bootstrapping. A mature, tuned IFSP model is then pushed to the target fog cluster. There, IFSP executes online updates on the orchestrator to keep the agent up to date with the incoming demand for each service. Thanks to the integrated load-balancing feature of the Kubernetes orchestration tool, the clusters can scale computing resources automatically when the volume of requests increases and more computation is required.
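To make the scheduler's learning rule more concrete, here is a minimal sketch of tabular R-learning in its textbook average-reward form. It is an illustration only, not the authors' IFSS implementation: the state labels, the two actions (`wait`, `trigger_bootstrap`), the reward value, and the step sizes are all hypothetical.

```python
from collections import defaultdict


class RLearner:
    """Tabular R-learning (average-reward RL). Illustrative sketch only."""

    def __init__(self, actions, alpha=0.1, beta=0.01):
        self.actions = list(actions)
        self.alpha = alpha            # step size for the action values
        self.beta = beta              # step size for the average-reward estimate
        self.rho = 0.0                # running estimate of average reward per step
        self.q = defaultdict(float)   # (state, action) -> relative action value

    def best_value(self, state):
        return max(self.q[(state, a)] for a in self.actions)

    def greedy_action(self, state):
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        # Value update: the reward is measured relative to the average reward rho.
        td_error = (reward - self.rho
                    + self.best_value(next_state) - self.q[(state, action)])
        self.q[(state, action)] += self.alpha * td_error
        # rho is only adjusted when the action taken is greedy in `state`.
        if self.q[(state, action)] == self.best_value(state):
            self.rho += self.beta * (reward - self.rho
                                     + self.best_value(next_state)
                                     - self.best_value(state))


# Hypothetical usage: one observed transition for a single fog cluster.
scheduler = RLearner(actions=["wait", "trigger_bootstrap"])
scheduler.update("demand_shift", "trigger_bootstrap", 1.0, "demand_shift")
```

Unlike discounted Q-learning, R-learning has no discount factor; it optimizes the long-run average reward, which suits a scheduler that runs continuously.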

Building our IFSP requires defining the Markov Decision Process (MDP) components, which are the states, actions, and cost function. Because the services to place, the available resources, and the demand for services all change over time, and because the agent must handle large inputs, we present in this work a scalable MDP design for our problem. This design allows the agent to make proactive placement decisions, match the demand of users, and meet the fog environment’s requirements. Using our MDP design, we use DQN to build our IFSP agent. With IFSP, we demonstrate the high utility of the decisions made, the ability to improve decisions by adapting to unexpected changes in the environment, and superiority over state-of-the-art heuristic solutions. To the best of our knowledge, our work is the first to solve the service placement problem in the fog computing context using DRL.
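The three MDP components named above (states, actions, cost function) can be illustrated with a toy placement environment. This is a sketch under stated assumptions, not the paper's MDP design: the state fields, the `(service, node)` action encoding, the `size` and `node_cost` parameters, and the cost formula are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Action = Tuple[int, int]  # (service index, fog node index), hypothetical encoding


@dataclass(frozen=True)
class State:
    demand: Tuple[int, ...]             # predicted requests per service
    capacity: Tuple[int, ...]           # free resource units per fog node
    placed: Tuple[Action, ...] = ()     # placements made so far


def valid_actions(state: State, size: Sequence[int]) -> List[Action]:
    """List placements of unplaced services on nodes with enough free capacity.

    size[s] is the number of resource units service s needs (assumed).
    """
    placed_services = {s for s, _ in state.placed}
    return [(s, n)
            for s in range(len(state.demand)) if s not in placed_services
            for n in range(len(state.capacity)) if state.capacity[n] >= size[s]]


def step(state: State, action: Action, size: Sequence[int],
         node_cost: Sequence[float]) -> Tuple[State, float]:
    """Apply one placement and return (next_state, cost).

    Assumed cost: price of using the chosen node plus the demand that is
    still unserved after this placement.
    """
    service, node = action
    capacity = list(state.capacity)
    capacity[node] -= size[service]
    next_state = State(state.demand, tuple(capacity),
                       state.placed + ((service, node),))
    served = {s for s, _ in next_state.placed}
    unmet = sum(d for s, d in enumerate(state.demand) if s not in served)
    return next_state, node_cost[node] + unmet


# Hypothetical example: two services, two fog nodes.
start = State(demand=(5, 3), capacity=(2, 1))
size, node_cost = (2, 1), (1.0, 0.5)
actions = valid_actions(start, size)
after, cost = step(start, (0, 0), size, node_cost)
```

An agent would repeatedly pick one of `valid_actions` and observe the resulting cost; a learning algorithm such as DQN then estimates the long-run cost of each choice.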

The contributions of this work are as follows:

- We propose a complete end-to-end architecture benefiting from the on-demand formation approach and introducing the IFSS scheduler and IFSP bootstrapper. This solution avoids the exploration errors and long learning time required by DRL algorithms.

- We present a novel MDP design to build the IFSP agent that accounts for changes in demand and available resources and makes optimal placement decisions based on the fog environment requirements.

- We exploit the use of DQN to build the IFSP agent. Experiments conducted using real-life datasets demonstrate the ability of IFSP to make efficient and proactive decisions, adapt to changing demand and cost parameters in the environment, and outperform existing heuristic solutions.
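
For readers unfamiliar with DQN, the standard temporal-difference objective is sketched below, written for cost minimization since the MDP here is defined with a cost function. This is the generic textbook form under that assumption; the source does not state the exact loss used for IFSP. Here $c$ is the observed cost, $\gamma$ the discount factor, $\theta$ the online network parameters, and $\theta^{-}$ the periodically updated target network parameters.

```latex
y = c + \gamma \min_{a'} Q(s', a'; \theta^{-}), \qquad
L(\theta) = \mathbb{E}_{(s, a, c, s')}\left[ \big( y - Q(s, a; \theta) \big)^{2} \right]
```

With rewards instead of costs, the $\min$ becomes a $\max$ and the agent picks the action with the highest estimated value.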